Setting us up the bomb in code

I've always known that scripts were out there scouring the internet for exploitable websites. It's why the most popular blogging applications (I'm looking at you, WordPress) are so widely hacked.

To make my own little positive impact on the world (and because it's fun), I've decided to set up a few traps on my site.

I don't mind telling you about the traps, since it's unlikely that anybody trying to hack my site would actually read the blog. If they did, they'd know that my site is completely static, hosted on AWS S3, and served by AWS CloudFront. I won't say that my site is unhackable, but I will say that it would be difficult and there would be little to gain. There's no database, no credentials, and no secrets. Also, recovering from a hacked site would take me less than a minute.

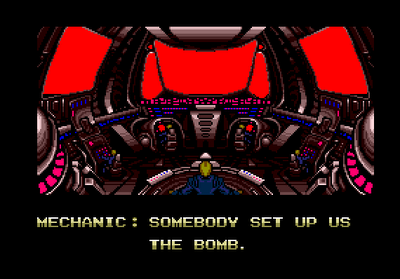

So, on to the trap: the gzip bomb.

Gzip, a cousin of the zip format you know and love (both typically use the DEFLATE algorithm), is very good at compressing repeating data. What if I could take a bunch of data, compress it, and somehow convince script kiddies to decompress it, causing their scripts to slow down, fill their hard drives, and maybe even crash some things? As it turns out, it's not terribly difficult.
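
To see just how lopsided this is, here's a minimal Python sketch using only the standard library's gzip module. It compresses a megabyte of null bytes and a megabyte of random bytes and compares the ratios. The zeros should shrink by roughly a thousand to one, while the random data doesn't shrink at all (it actually grows by a few bytes of overhead).

```python
import gzip
import os


def compression_ratio(data: bytes) -> float:
    """Return the original size divided by the gzip-compressed size."""
    return len(data) / len(gzip.compress(data))


zeros = bytes(1_000_000)       # 1 MB of null bytes: maximally repetitive
noise = os.urandom(1_000_000)  # 1 MB of random bytes: nothing repeats to exploit

print(f"zeros: {compression_ratio(zeros):.0f}:1")
print(f"noise: {compression_ratio(noise):.3f}:1")
```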

As part of optimizing your website, you ought to compress your text content with gzip and instruct your visitors' browsers to decompress it before displaying it. Sites load much faster this way, since visitors download less data, and it's not very difficult to set up; most web servers handle it automatically or with a little configuration. The server sends the gzip-compressed content, and the HTTP response carries a Content-Encoding header set to gzip. That header is the instruction for the browser to decompress the content.
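
For the curious, here's a minimal sketch, in Python, of what that header means to the receiving end. The decode_body function is a hypothetical helper that only understands gzip; real browsers and HTTP libraries support more encodings and parse headers far more carefully.

```python
import gzip


def decode_body(headers: dict[str, str], body: bytes) -> bytes:
    """Undo the Content-Encoding roughly the way a browser would (gzip only)."""
    # HTTP header names are case-insensitive, so normalise them first.
    lowered = {name.lower(): value for name, value in headers.items()}
    encoding = lowered.get("content-encoding", "").strip().lower()
    if encoding == "gzip":
        return gzip.decompress(body)
    return body  # no (supported) encoding: hand the bytes back untouched


page = b"<html><body>" + b"Hello! " * 1000 + b"</body></html>"
wire = gzip.compress(page)  # what the server actually sends
response_headers = {"Content-Type": "text/html", "Content-Encoding": "gzip"}

print(len(page), "bytes of HTML,", len(wire), "bytes on the wire")
```

Note that the client decompresses whatever it's given; nothing in the header says how big the result will be. That's the property the trap relies on.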

So, to set up the bomb, I'll create a gzip file containing a bunch of garbage data (repeating zeros), give it a name an exploit script will absolutely look for, and upload it to my hosting.

Looking at my CloudFront usage reports, I can see that I get scanned often, and the most common thing the scanners look for is the /wp-login.php file. This is the file that processes WordPress admin logins, so it makes sense that it would be at the top of their list.
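
The console reports are the easy way to see this. If you also have CloudFront standard access logging enabled (an assumption; it has to be switched on, and it writes gzipped, tab-separated log files to an S3 bucket), a rough tally of the most requested paths could look like the Python sketch below. It assumes the log files have already been downloaded to a local folder and that cs-uri-stem is the eighth field, as in the standard log format; top_paths is a hypothetical helper, not part of any AWS tool.

```python
import collections
import gzip
from pathlib import Path

# Assumption: in CloudFront standard logs, cs-uri-stem is the 8th tab-separated field.
URI_STEM_FIELD = 7


def top_paths(log_dir: str, n: int = 10) -> list[tuple[str, int]]:
    """Count requests per path across every *.gz log file in log_dir."""
    counts: collections.Counter[str] = collections.Counter()
    for log_file in Path(log_dir).glob("*.gz"):
        with gzip.open(log_file, "rt", encoding="utf-8", errors="replace") as f:
            for line in f:
                if line.startswith("#"):
                    continue  # skip the "#Version" and "#Fields" header lines
                fields = line.rstrip("\n").split("\t")
                if len(fields) > URI_STEM_FIELD:
                    counts[fields[URI_STEM_FIELD]] += 1
    return counts.most_common(n)
```

Something like `for path, hits in top_paths("cf-logs"): print(hits, path)` would then print the busiest paths first, where "cf-logs" stands in for wherever you saved the logs.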

So, with a handy flick of the wrist:

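```shell
# 1024-byte blocks x 10,240,000 = about 10GB of null bytes, gzipped down to about 10MB
dd if=/dev/zero bs=1024 count=10240000 | gzip > wp-login.php

# Upload with headers telling clients the body is gzip-encoded HTML
aws s3 cp wp-login.php s3://my-bucket/ --content-type "text/html" --content-encoding "gzip"
```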
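
If you'd rather not use dd, the same zeros-into-gzip pipeline can be sketched in Python. The make_gzip_bomb helper below is a hypothetical name of my own; it streams zeros into the file one chunk at a time so the full 10GB never sits in memory, and it uses compression level 6, the gzip command's default.

```python
import gzip


def make_gzip_bomb(path: str, total_bytes: int, chunk_size: int = 1024 * 1024) -> None:
    """Stream total_bytes of null bytes into a gzip file, one chunk at a time."""
    chunk = bytes(chunk_size)  # a reusable block of zeros, like reading /dev/zero
    with gzip.open(path, "wb", compresslevel=6) as out:
        remaining = total_bytes
        while remaining > 0:
            n = min(chunk_size, remaining)
            out.write(chunk[:n])  # the final write may be a partial chunk
            remaining -= n


# Same uncompressed size as the dd command (1024 x 10,240,000 bytes, about 10GB):
# make_gzip_bomb("wp-login.php", 1024 * 10_240_000)
```

The call in the last comment produces the same amount of uncompressed data as the dd pipeline; expect it to take a while, as the shell version does.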

I have created a 10MB file called wp-login.php that expands to 10GB of nothing, and it only takes about a minute to create. That 10GB isn't repeating "0" characters, either; it's binary zeros, i.e. null characters. Decompressing it yields nothing but nonsense, but the attempt will still be made, and that's the important part.
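
To check the "10MB expands to 10GB" claim without actually filling your memory or disk, you can count the decompressed bytes as they stream past. Here's a sketch using Python's gzip module, whose read(n) decompresses incrementally; inflated_size is a hypothetical helper, and its limit parameter lets you bail out early instead of chewing through everything.

```python
import gzip


def inflated_size(path: str, limit: int, chunk_size: int = 1024 * 1024) -> int:
    """Count how many bytes a gzip file expands to, giving up once past limit.

    Only about one chunk of decompressed data is held in memory at a time.
    A return value greater than limit means counting stopped early.
    """
    total = 0
    with gzip.open(path, "rb") as f:
        while total <= limit:
            block = f.read(chunk_size)
            if not block:
                break  # reached the end of the compressed stream
            total += len(block)
    return total
```

Running inflated_size("wp-login.php", 20 * 1024**3) would count the whole thing, which takes a while but never needs more than about a megabyte of memory.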

It's live on my site now. I won't link to it, because if you clicked the link it could crash your browser (although Chrome seems to handle it pretty well, albeit slowly).

Am I sure this will inflict pain upon the lower levels of the internet hacker community? No, but it costs me nothing to do and I am an optimist at heart.